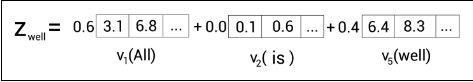

Instead of having a single attention head, we can use multiple attention heads. We learned how to compute the attention matrix $Z$. Instead of computing a single attention matrix, we can compute multiple attention matrices. But what is the use of computing multiple attention matrices? Let’s understand this with an example. Consider the phrase ‘All is well’. Say we need to compute the self-attention of the word ‘well’. After computing the similarity scores, suppose we have the following:

$$z_{\text{well}} = 0.6\,v_{\text{All}} + 0.0\,v_{\text{is}} + 0.4\,v_{\text{well}}$$

As we can observe, the self-attention value of the word ‘well’, $z_{\text{well}}$, is the sum of the value vectors weighted by the scores. Looking closely, the attention value of the actual word ‘well’ is dominated by the other word ‘All’: since we multiply the value vector of ‘All’ by 0.6 and the value vector of ‘well’ itself by only 0.4, $z_{\text{well}}$ takes 60% of its weight from the value vector of ‘All’ and only 40% from the value vector of ‘well’.
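To make the weighted sum concrete, the sketch below computes $z_{\text{well}}$ from the scores 0.6 for ‘All’ and 0.4 for ‘well’ (so ‘is’ gets 0.0, since the scores sum to 1). The 3-dimensional value vectors are hypothetical numbers chosen only for illustration; in a real model they come from multiplying the input embeddings by the value weight matrix.

```python
# Hypothetical 3-dimensional value vectors for the words in "All is well"
v_all = [1.0, 0.0, 2.0]
v_is = [0.0, 3.0, 1.0]
v_well = [2.0, 1.0, 0.0]

# Attention scores of "well" over each word (they sum to 1)
weights = {"All": 0.6, "is": 0.0, "well": 0.4}
values = {"All": v_all, "is": v_is, "well": v_well}

# z_well is the score-weighted sum of the value vectors, one dimension at a time
z_well = [
    sum(weights[word] * values[word][i] for word in weights)
    for i in range(len(v_well))
]
print(z_well)
```

Each component of `z_well` is pulled 60% toward `v_all` and only 40% toward `v_well`, which is exactly the dominance described above.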

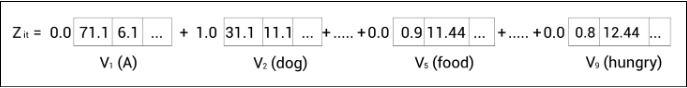

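Such dominance is useful only in circumstances where the meaning of the actual word is ambiguous. For instance, consider the sentence ‘A dog ate the food because it was hungry’. Say we are computing the self-attention for the word ‘it’. After computing the similarity scores, suppose we have the following, with every other word (including ‘food’) receiving a score of 0:

$$z_{\text{it}} = 1.0\,v_{\text{dog}}$$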

In this case, the attention value of the word ‘it’ is just the value vector of the word ‘dog’, so it is entirely dominated by ‘dog’. But this is fine here, because the meaning of the word ‘it’ is ambiguous: it may refer to either ‘dog’ or ‘food’.
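A score of (almost) 1.0 on a single word typically arises because softmax turns a large gap in raw similarity scores into a nearly one-hot weight vector. The sketch below uses hypothetical raw scores of ‘it’ against three words; the numbers are assumptions for illustration, not values from the text.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical raw similarity scores of "it" against three words
words = ["dog", "food", "it"]
raw_scores = [12.0, 2.0, 1.0]

weights = softmax(raw_scores)
for word, p in zip(words, weights):
    print(f"{word}: {p:.4f}")
```

Because the score for ‘dog’ exceeds the others by about 10, its weight comes out above 0.999, so $z_{\text{it}}$ is essentially $v_{\text{dog}}$.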

Thus, when the actual word is ambiguous, as in the preceding example, it is useful for the value vectors of other words to dominate its attention value; otherwise, this dominance makes it harder to capture the right meaning of the word.

How to compute multi-head attention matrices

So, to make our results more accurate, instead of computing a single attention matrix, we compute multiple attention matrices and then concatenate their results. The idea behind multi-head attention is that each head has its own query, key, and value weight matrices, so different heads can learn to focus on different relationships between words; combining them means the final attention matrix does not rely on the scores of a single head alone. Let’s explore this in more detail.

Let’s suppose we are computing two attention matrices, $Z_1$ and $Z_2$. First, let’s compute the attention matrix $Z_1$.

Computing attention matrix Z1

We learned that to compute the attention matrix, we create three new matrices, called the query, key, and value matrices. To create the query $Q_1$, key $K_1$, and value $V_1$ matrices, we introduce three new weight matrices, called $W_1^Q$, $W_1^K$, and $W_1^V$. We create the query, key, and value matrices by multiplying the input matrix $X$ by $W_1^Q$, $W_1^K$, and $W_1^V$, respectively:

$$Q_1 = X W_1^Q, \qquad K_1 = X W_1^K, \qquad V_1 = X W_1^V$$

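Now, the attention matrix $Z_1$ can be computed as follows, where $d_k$ is the dimension of the key vectors:

$$Z_1 = \operatorname{softmax}\left(\frac{Q_1 K_1^{T}}{\sqrt{d_k}}\right) V_1$$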
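Here is a minimal NumPy sketch of computing one attention matrix $Z_1 = \operatorname{softmax}(Q_1 K_1^{T}/\sqrt{d_k})\,V_1$. The sizes (3 words, embedding width 8, $d_k = 4$) and the random weight matrices are assumptions standing in for learned parameters; the helper names `softmax` and `attention_head` are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_head(X, W_q, W_k, W_v):
    """Compute one attention matrix Z = softmax(Q K^T / sqrt(d_k)) V."""
    Q = X @ W_q                        # query matrix, shape (n, d_k)
    K = X @ W_k                        # key matrix, shape (n, d_k)
    V = X @ W_v                        # value matrix, shape (n, d_v)
    d_k = K.shape[-1]
    scores = Q @ K.T / np.sqrt(d_k)    # scaled similarity scores, shape (n, n)
    weights = softmax(scores, axis=-1) # each row sums to 1
    return weights @ V                 # attention matrix, shape (n, d_v)

rng = np.random.default_rng(0)
n, d_model, d_k = 3, 8, 4              # hypothetical sizes
X = rng.normal(size=(n, d_model))      # input matrix, one row per word
# Random stand-ins for the learned weights W_1^Q, W_1^K, W_1^V
W1_q, W1_k, W1_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))

Z1 = attention_head(X, W1_q, W1_k, W1_v)
print(Z1.shape)  # (3, 4)
```

Row $i$ of `Z1` is the attention value of word $i$: a weighted sum of the rows of $V_1$, just like $z_{\text{well}}$ in the earlier example.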

Computing attention matrix Z2

Similarly, to compute $Z_2$, we create the query $Q_2$, key $K_2$, and value $V_2$ matrices. We introduce three new weight matrices, called $W_2^Q$, $W_2^K$, and $W_2^V$, and create the query, key, and value matrices by multiplying the input matrix $X$ by $W_2^Q$, $W_2^K$, and $W_2^V$, respectively:

$$Q_2 = X W_2^Q, \qquad K_2 = X W_2^K, \qquad V_2 = X W_2^V$$

Now, the attention matrix $Z_2$ can be computed as follows:

$$Z_2 = \operatorname{softmax}\left(\frac{Q_2 K_2^{T}}{\sqrt{d_k}}\right) V_2$$
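Since both heads read the same input $X$ but use their own weight matrices, their outputs have matching shapes. Assuming $n$ words, an input width of $d_{\text{model}}$, $W_i^Q, W_i^K \in \mathbb{R}^{d_{\text{model}} \times d_k}$, and $W_i^V \in \mathbb{R}^{d_{\text{model}} \times d_v}$ (these size symbols are introduced here for illustration), the shapes for head $i$ work out as:

$$
\begin{aligned}
Q_i &= X W_i^Q \in \mathbb{R}^{n \times d_k}, \qquad K_i = X W_i^K \in \mathbb{R}^{n \times d_k}, \qquad V_i = X W_i^V \in \mathbb{R}^{n \times d_v},\\
\frac{Q_i K_i^{T}}{\sqrt{d_k}} &\in \mathbb{R}^{n \times n},\\
Z_i &= \operatorname{softmax}\left(\frac{Q_i K_i^{T}}{\sqrt{d_k}}\right) V_i \in \mathbb{R}^{n \times d_v}.
\end{aligned}
$$

So $Z_1$ and $Z_2$ both have one row per word, which is what allows them to be concatenated side by side.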

Concatenating multiple attention matrices

Similarly, we can compute $h$ attention matrices. Suppose we have eight attention matrices, $Z_1$ to $Z_8$; then we can simply concatenate all the attention heads (attention matrices) and multiply the result by a new weight matrix $W_0$ to create the final attention matrix, as shown:

$$\text{Multi-head attention} = \operatorname{Concatenate}(Z_1, Z_2, \ldots, Z_8)\, W_0$$
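The whole procedure can be sketched in NumPy: compute eight heads, each with its own random (stand-in) query, key, and value weights, concatenate them along the feature axis, and multiply by $W_0$. The sizes are hypothetical, and setting the per-head size to $d_{\text{model}}/h$ is an assumption borrowed from the common Transformer setup so that the concatenation has width $d_{\text{model}}$.

```python
import numpy as np

def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention_head(X, W_q, W_k, W_v):
    # Z_i = softmax(Q_i K_i^T / sqrt(d_k)) V_i for one head
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    scores = Q @ K.T / np.sqrt(K.shape[-1])
    return softmax(scores, axis=-1) @ V

def multi_head_attention(X, heads, W_0):
    """heads: list of (W_q, W_k, W_v) tuples, one per attention head."""
    Z = [attention_head(X, *w) for w in heads]  # Z_1 ... Z_h
    concat = np.concatenate(Z, axis=-1)         # shape (n, h * d_k)
    return concat @ W_0                         # shape (n, d_model)

rng = np.random.default_rng(0)
n, d_model, h = 3, 16, 8                        # hypothetical sizes, eight heads
d_k = d_model // h                              # per-head size (assumption)
X = rng.normal(size=(n, d_model))               # input matrix
# Each head gets its own random stand-ins for W_i^Q, W_i^K, W_i^V
heads = [tuple(rng.normal(size=(d_model, d_k)) for _ in range(3)) for _ in range(h)]
W_0 = rng.normal(size=(h * d_k, d_model))       # random stand-in for W_0

out = multi_head_attention(X, heads, W_0)
print(out.shape)  # (3, 16)
```

Concatenating along the last axis keeps one row per word, so the final multiplication by $W_0$ mixes the eight heads' outputs for each word back into a single $d_{\text{model}}$-wide representation.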